An Amendment to BERTology

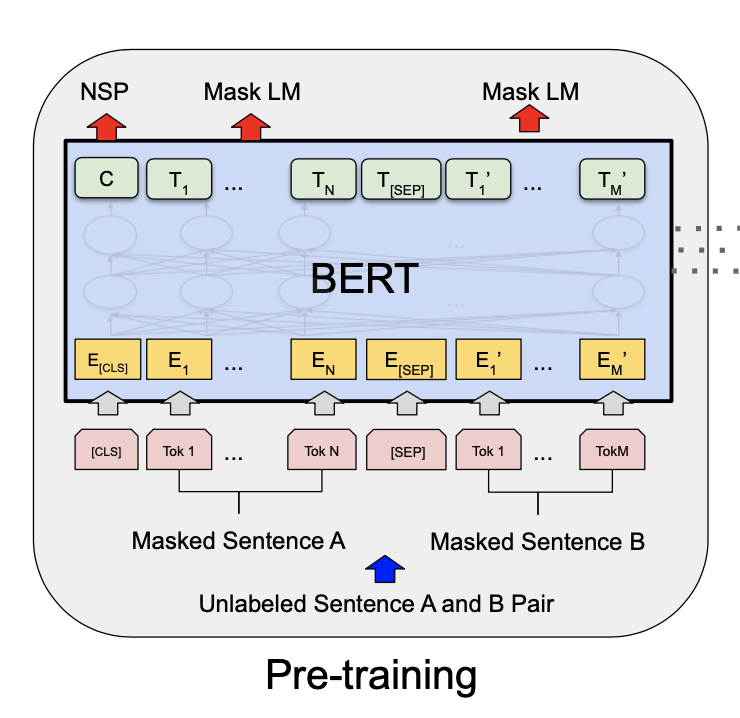

BERT architecture

For this project, we propose a new method to study how well BERT models handle numeracy in two tasks: classification and number-related question answering. RoBERTa is a variant of BERT developed by Facebook AI. We compare RoBERTa's performance on the original dataset and on a customized dataset in which all numbers are masked with a special